Hey, back at it. This isn’t a deep post, I just wanted to share some fun I had running local inference on a MacBook Pro M2.

So, let’s get started with oobabooga’s text-generation-webui, which is:

“A Gradio web UI for Large Language Models. Its goal is to become the AUTOMATIC1111/stable-diffusion-webui of text generation.”

Github: https://github.com/oobabooga/text-generation-webui

Let’s set it up:

git clone git@github.com:oobabooga/text-generation-webui.git

cd text-generation-webui

python3 -m venv venv

source venv/bin/activate

pip install -r requirements.txt

./start_macos.sh

After reading deeper into the README, I should have used requirements_apple_silicon.txt, but it was smart enough to pick this up, yay!

After installation, the local server is running on: http://127.0.0.1:7860
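If you'd rather script the wait than keep refreshing the browser, here's a minimal sketch that polls that address until it answers. The function name wait_for_url and its defaults are made up for illustration and are not part of the webui; the only assumption is that curl is on your PATH.

```shell
# Poll a URL until it responds or we run out of attempts.
# wait_for_url URL [ATTEMPTS] [DELAY_SECONDS]   (hypothetical helper)
wait_for_url() {
  url=$1
  attempts=${2:-30}
  delay=${3:-2}
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -s: silent, -f: treat HTTP errors as failure, -o: discard the body
    if curl -sf -o /dev/null "$url"; then
      echo "up after $i attempt(s)"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "no response from $url after $attempts attempts" >&2
  return 1
}

# Usage (7860 is the port shown above):
# wait_for_url http://127.0.0.1:7860 && open http://127.0.0.1:7860
```

The usage line is commented out so pasting the block only defines the function.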

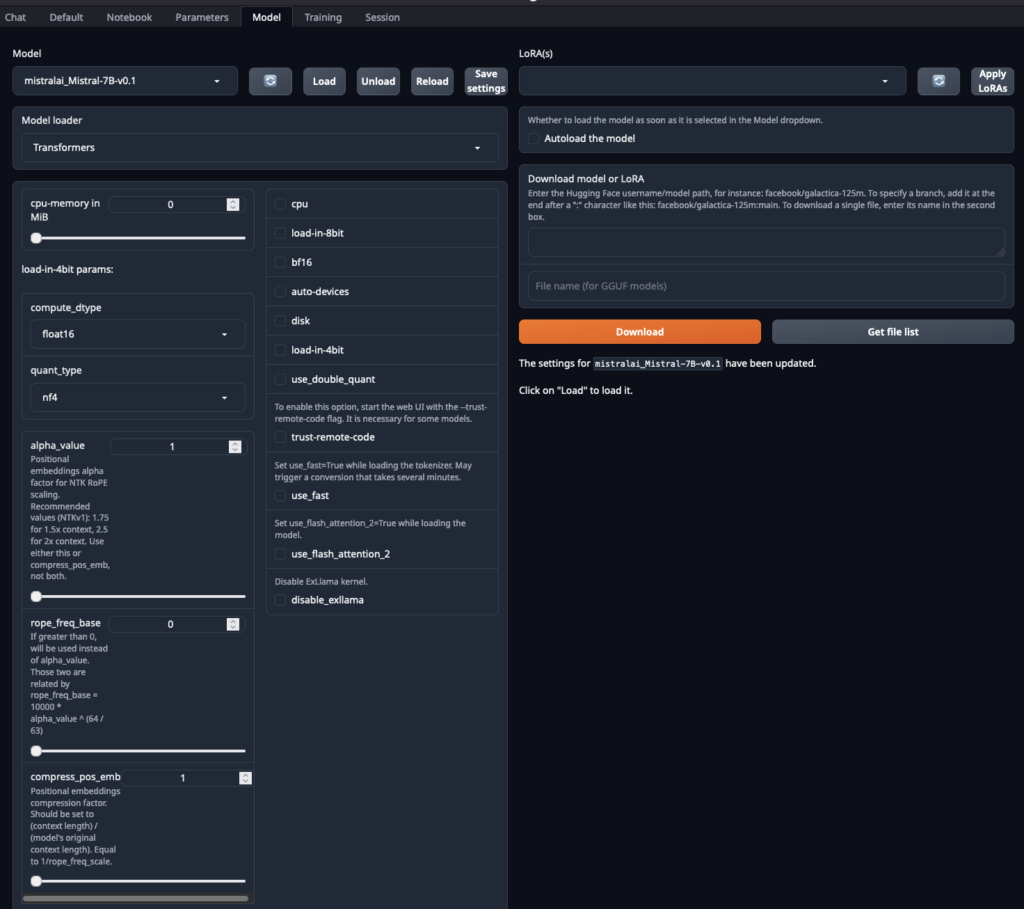

No model is loaded yet, so grab one: go to the “Model” tab and, under “Download model or LoRA”, enter mistralai/Mistral-7B-v0.1 (https://huggingface.co/mistralai/Mistral-7B-v0.1)

Refresh and load the model from the “Model” dropdown.
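The same download can also be kicked off from the terminal with the repo's download-model.py script. The sketch below checks whether a model is already on disk first. It assumes the webui saves a Hugging Face repo like org/name into models/org_name, and the helper names model_dir_name and have_model are hypothetical.

```shell
# Folder name the webui is ASSUMED to use for a Hugging Face repo id:
# "org/name" becomes "org_name" under models/.
model_dir_name() {
  printf '%s\n' "$1" | tr '/' '_'
}

# Succeed if the model folder exists and is not empty.
# have_model REPO_ID [MODELS_DIR]   (hypothetical helper)
have_model() {
  dir="${2:-models}/$(model_dir_name "$1")"
  [ -d "$dir" ] && [ -n "$(ls -A "$dir" 2>/dev/null)" ]
}

# Usage, from inside the text-generation-webui checkout with the venv active:
# have_model mistralai/Mistral-7B-v0.1 || python download-model.py mistralai/Mistral-7B-v0.1
```

If the folder naming differs on your install, only model_dir_name needs adjusting.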

Now you can navigate to “Default” and start chatting with your LLM. In the terminal window you’ll get stats such as tokens/sec during generation.

While experimenting I noticed the responses getting truncated. The default limit is 200 new tokens (on my local install), so I bumped it to 500 under Parameters → Generation → max_new_tokens.

Overall this model isn’t nearly as good as GPT for basic stuff like answering questions or generating code, but I’ll continue to hack on it.

In the meantime, let’s try what all the folks on Reddit are going wild over: uncensored models. I did some googling and many people recommended Aeala/VicUnlocked-alpaca-65b-4bit (https://huggingface.co/Aeala/VicUnlocked-alpaca-65b-4bit)

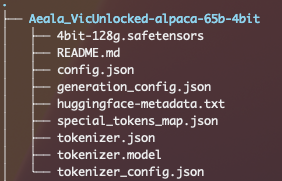

Go back to “Model” and enter Aeala/VicUnlocked-alpaca-65b-4bit. These are big files, so they take a while; you can monitor download progress in the terminal. Models are placed in text-generation-webui/models
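To see how much disk each model is eating, something like this works from the text-generation-webui directory. It's a small sketch: model_sizes is a made-up helper, and it assumes each model lives in its own subfolder of models.

```shell
# Print the on-disk size (in KiB) of each model folder, largest last.
# model_sizes [MODELS_DIR]   (hypothetical helper)
model_sizes() {
  dir=${1:-models}
  # -s: one total per folder, -k: plain KiB numbers so sort -n can order them
  du -sk "$dir"/*/ 2>/dev/null | sort -n
}

# Usage:
# model_sizes            # looks in ./models
# model_sizes /path/to/text-generation-webui/models
```

Sizes are printed in KiB rather than human-readable units so the sort stays numeric.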

It’s pretty funny what you can ask of these models. Give it a try!

Also, a bit of a side note: I had to watch a data transfer tonight and found out that df on macOS isn’t accurate (for those coming from *nix). Instead you can use something like this (swap in your own volume; diskutil list shows them):

diskutil info /dev/disk3s1s1 | grep "Free"

That’s useful to stick in the watch command (not installed by default on macOS; brew install watch gets it) and keep open in a terminal:

watch -n 5 'diskutil info /dev/disk3s1s1 | grep "Free"'

Until next time, sk out!